Multi-Agent Reinforcement Learning

Published:

This is a blog template credit to Marcus Dominguez-Kuhne

Standard Deep Reinforcement Learning

Deep reinforcement learning has made great strides in enabling computers to solve tasks such as multiplayer games. Reinforcement learning refers to goal-oriented algorithms that learn how to attain a complex objective over many steps. The agent is trained to maximize the reward it obtains, often in minimal time, which can be encouraged by deducting a portion of the reward at each step. We model problems as POMDPs (Partially Observable Markov Decision Processes). The underlying process has the Markov property, meaning that the next state depends only on the current state and the action taken, not on the earlier history, while the agent can only observe part of the world state. This is intuitive from video games, where you may only see what’s currently on your screen, but the world state includes what’s behind you. During each iteration of training, we update our deep neural network according to the reward function so that it assigns values to states and actions. Reinforcement learning works best with a dense reward function, one that gives a reward at every step, so that you have the most information about how a state-action pair affects your overall reward.

The algorithm used to play Dota 2 in a multi-agent setting, as described below, was PPO (Proximal Policy Optimization), which is a policy gradient algorithm. Policy gradient methods model and optimize the policy directly. Since the focus of this blog post is how agents can work together, we will not focus on PPO; however, you can check out the following link for a good explanation: Proximal Policy Optimization Algorithms
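To make the idea of deducting a little reward per step concrete, here is a minimal sketch of how an episode's return could be computed. The function name, the discount factor `gamma`, and the penalty value are illustrative assumptions, not details from any particular system.

```python
def discounted_return(rewards, gamma=0.99, step_penalty=0.0):
    """Discounted sum of rewards, with a fixed penalty subtracted at every step.

    gamma and step_penalty are illustrative knobs, not values from the post.
    """
    total = 0.0
    # Work backwards: return at step t = (reward - penalty) + gamma * return at t+1.
    for r in reversed(rewards):
        total = (r - step_penalty) + gamma * total
    return total


# Two episodes that both reach a goal worth +1, one in 3 steps and one in 6.
fast = discounted_return([0.0, 0.0, 1.0], gamma=1.0, step_penalty=0.01)
slow = discounted_return([0.0] * 5 + [1.0], gamma=1.0, step_penalty=0.01)
print(fast > slow)  # the shorter episode keeps more of its reward
```

With a per-step penalty, two episodes that reach the same goal are no longer equally good: the one that takes fewer steps ends up with the higher return.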

Reinforcement learning has been shown to be good at tasks that take multiple steps to achieve and have a defined end goal. Examples include Atari games, robotic manipulation, and robotic bipedal motion. These scenarios suit reinforcement learning because we can define a clear reward. For example, with Atari games the reward function is often the final score received at the end of the game. Reinforcement learning can also be used for real-world applications such as self-driving cars, where the car can learn from visual input where the road is and how to drive accordingly. Despite these strengths, the examples above are single-agent applications and do not consider interactions with other agents, which is where multi-agent reinforcement learning comes in.

Multi-Agent Reinforcement Learning

Billions of people inhabit the world, each with their own goals and actions, but they’re still capable of coming together into teams and organizations in impressive displays of collective intelligence. Multi-agent learning studies this kind of setting: many individual agents act independently, yet must learn how to interact and cooperate with other agents. This problem is difficult because the other agents are also learning how to cooperate, so the training environment is constantly changing. Two further problems arise in a multi-agent environment: how do we determine which agent is assigned what credit during training, and how do we incentivize agents to coordinate with each other?

Multiplayer video games are a great set of problems for multi-agent reinforcement learning. Agents have been trained on games such as Dota 2 and Quake 3 (multiplayer modes) and have been shown to beat professional human players. There have also been cases where an AI agent played on a team with humans and cooperated with them. These games are great testing grounds because they require agents to work together to beat the other team. The advantage of testing algorithms in these environments is that we can do years of training in months and rapidly test and train different algorithms. Furthermore, you can set agents to play against other agents, which trains both sets of agents and further increases training efficiency. Games such as Dota 2 are also much harder than games such as Go because of their long time horizons, partial observability, and high-dimensional observation and action spaces with complex rules. On top of that, Dota 2 has multiple playable characters, each with different abilities.

Video Game Application: Dota 2

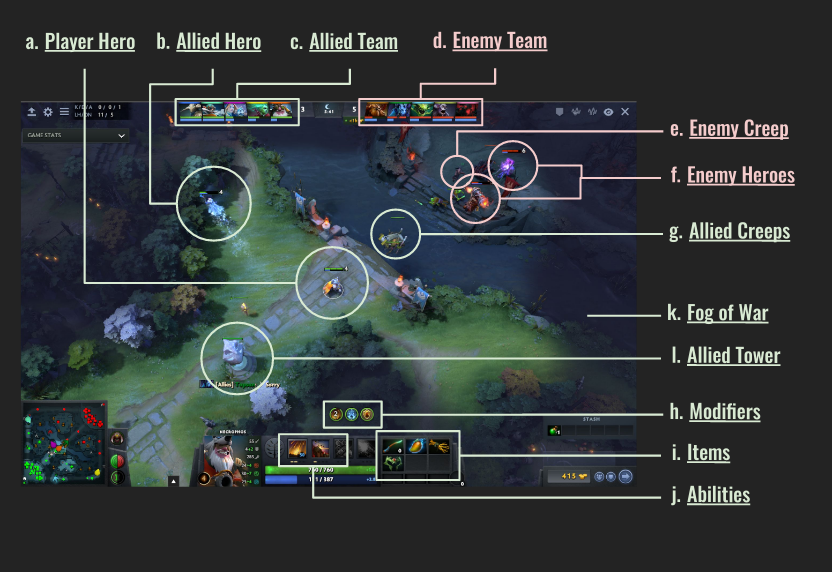

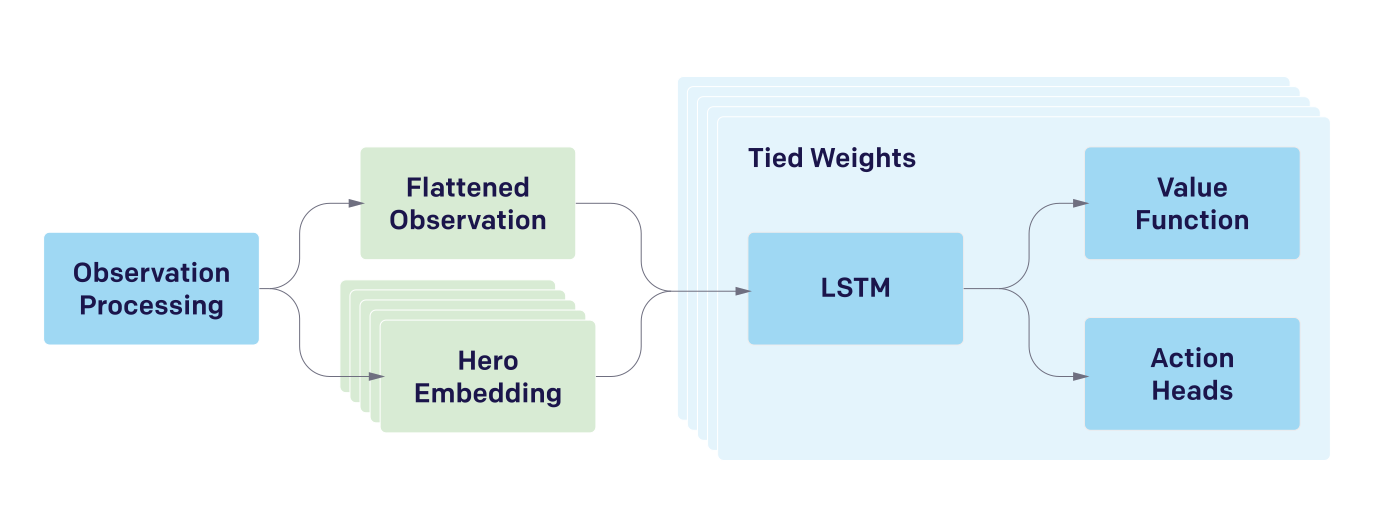

For playing the multi-agent game Dota 2, several old ideas from reinforcement learning were applied in a new way. First, due to the size and complexity of Dota 2 and its action space, reinforcement learning architectures were scaled up to run on thousands of GPUs over months. In fact, the Dota 2 team of agents, dubbed “OpenAI Five”, trained for 180 days. Compared to AlphaGo, this used a 50 to 150 times larger batch size, a 20 times larger model, and 25 times longer training time. The primary building block of the model was a 4096-unit LSTM (Long Short-Term Memory). Each of the five heroes on the team was controlled by a replica of this network with almost identical inputs, each with its own hidden state. The inputs are nearly the same because, in Dota 2, the “fog of war” is nearly identical for every teammate within a team. The network’s output still differs by hero, because the input specifies which hero is being controlled. The training data was collected from every hero and stored in a replay buffer, which was then used to optimize the neural network.
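The "one network, five replicas, separate hidden states" arrangement can be sketched in a few lines. This is only a toy under stated assumptions: it uses a plain tanh recurrent cell instead of an LSTM, tiny sizes instead of 4096 units, and the class name, weight initialization, and "hero id as last input feature" convention are all hypothetical.

```python
import math
import random


class SharedRecurrentPolicy:
    """One set of weights shared by every hero; hidden state is kept per hero.

    Toy stand-in: a tanh recurrent cell, not the LSTM used in the real system.
    """

    def __init__(self, obs_size, hidden_size, n_heroes=5, seed=0):
        rng = random.Random(seed)
        # Shared parameters: a single copy used for all heroes.
        self.w_in = [[rng.uniform(-0.1, 0.1) for _ in range(obs_size)]
                     for _ in range(hidden_size)]
        self.w_rec = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]
                      for _ in range(hidden_size)]
        # Per-hero recurrent state: one hidden vector for each hero.
        self.hidden = [[0.0] * hidden_size for _ in range(n_heroes)]

    def step(self, hero_id, observation):
        """Advance one hero's hidden state with the shared weights and return it."""
        h_prev = self.hidden[hero_id]
        h_new = []
        for row_in, row_rec in zip(self.w_in, self.w_rec):
            pre = sum(w * x for w, x in zip(row_in, observation))
            pre += sum(w * h for w, h in zip(row_rec, h_prev))
            h_new.append(math.tanh(pre))
        self.hidden[hero_id] = h_new
        return h_new


policy = SharedRecurrentPolicy(obs_size=4, hidden_size=3)
team_view = [1.0, 0.5, -0.5]  # shared observation, nearly the same for teammates
# The last input slot is a hypothetical "which hero am I" feature.
h0 = policy.step(0, team_view + [0.0])
h1 = policy.step(1, team_view + [1.0])
```

Because the weights are shared, experience from any hero improves the same parameters, while the per-hero hidden state and the hero-identifying input let the outputs differ.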

As in other famous game-playing AI systems such as AlphaGo, self-play was used heavily in this experiment. Self-play allows the algorithm to train over long time horizons and to play many games in a short time span. It also means that experience from both teams can be used to optimize the algorithm, so that with every subsequent match both teams get better and the challenge each team faces increases appropriately.
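One practical question in self-play is which version of the agent to use as the opponent. A minimal sketch follows, assuming a pool of saved past policies is mixed in alongside the current one; the pool, the function name, and the `p_current=0.8` default are illustrative assumptions rather than details given in this post.

```python
import random


def sample_opponent(current_policy, past_policies, p_current=0.8, rng=random):
    """Pick the opponent for the next self-play game.

    With probability p_current the agent plays its current self; otherwise it
    plays a uniformly chosen saved past version (if any exist).
    """
    if not past_policies or rng.random() < p_current:
        return current_policy
    return rng.choice(past_policies)


# Policies are represented by plain labels here; real ones would be networks.
pool = ["v1", "v2", "v3"]
opponent = sample_opponent("v4", pool, p_current=0.8, rng=random.Random(0))
```

Playing mostly against the current version keeps the difficulty matched to the agent's skill, while occasional games against older versions are one common way to guard against forgetting how to beat earlier strategies.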

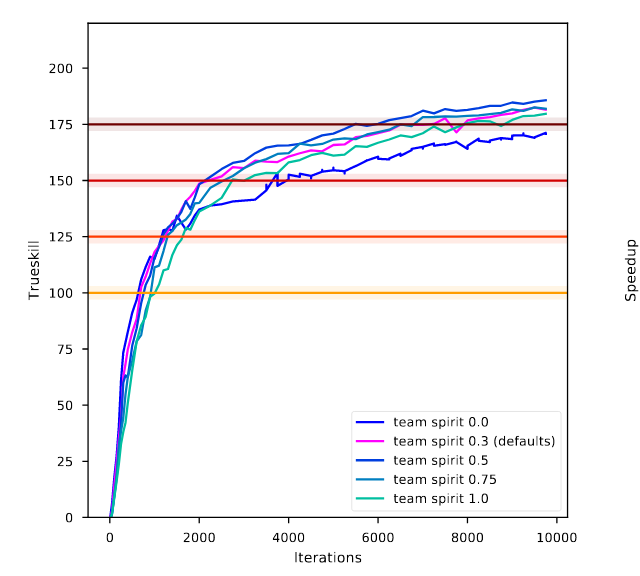

The problem of credit assignment is tricky for multi-agent reinforcement learning algorithms, because you need to determine which actions taken by which players produce the most reward. This paper uses a dense reward function for each hero (characters dying, resource collection, etc.) to make it easier to assign credit to individual agents. Sharing reward can backfire, however: if an agent receives a reward whenever another agent takes a good action, the extra reward adds variance to its learning signal. An innovative measure used in the reward function is “Team Spirit”, which measures how much agents on the team share in the spoils of their teammates. If each hero earns raw individual reward $\rho_i$ and team spirit is $\tau$, then the hero’s final reward is computed by:

$$r_i = (1 - \tau)\,\rho_i + \tau\,\bar{\rho}, \qquad \bar{\rho} = \frac{1}{5}\sum_{j=1}^{5} \rho_j$$

If the team spirit is 0, then every hero is for themself, and each hero only receives reward for their own actions, or $r_i = \rho_i$. If the team spirit is 1, then every reward is split evenly amongst all five heroes. For a team spirit $\tau$ in between, the team spirit-adjusted rewards are interpolated linearly between the two. Since the ultimate goal is to have the team win, the target is a team spirit of $\tau=1$. However, lower team spirit reduced gradient variance early in training, meaning that it was easier for agents to learn how good their individual actions were. For this reason, the implementation started with a low team spirit and then, once the agents could play the game individually, raised it to teach them how to play together.
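The team spirit adjustment is small enough to write out directly. The sketch below assumes the linear interpolation described above, that is, each hero's reward is `(1 - tau)` times its own raw reward plus `tau` times the team mean. The annealing schedule and its start and end values are hypothetical, included only to illustrate "start low, finish high".

```python
def team_spirit_rewards(raw_rewards, tau):
    """Blend each hero's own raw reward with the team average.

    tau = 0: every hero keeps only its own reward.
    tau = 1: every hero receives the team mean (rewards split evenly).
    """
    if not 0.0 <= tau <= 1.0:
        raise ValueError("tau must be in [0, 1]")
    team_mean = sum(raw_rewards) / len(raw_rewards)
    return [(1.0 - tau) * rho + tau * team_mean for rho in raw_rewards]


def anneal_team_spirit(step, total_steps, tau_start=0.2, tau_end=1.0):
    """Linearly raise tau over training (hypothetical schedule and values)."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return tau_start + frac * (tau_end - tau_start)


raw = [10.0, 0.0, 0.0, 0.0, 0.0]               # one hero earns everything
selfish = team_spirit_rewards(raw, tau=0.0)     # each hero keeps its own reward
shared = team_spirit_rewards(raw, tau=1.0)      # every hero gets the team mean
halfway = team_spirit_rewards(raw, tau=0.5)     # midpoint between the two
```

Note that the adjustment only redistributes reward: for any `tau`, the adjusted rewards sum to the same total as the raw rewards.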

Other Applications

Some real-life scenarios where multi-agent cooperation is essential include search and rescue, swarms of drones flying together, and autonomous cars reducing traffic. In each of these, every separate agent wants to maximize the reward it can obtain by fulfilling an objective, but the average reward across agents can be maximized by agents working together and cooperating to accomplish the overall task. The Dota 2 work built on the methods used in other projects such as AlphaGo, which learned to play Go, and AlphaStar, which learned to play StarCraft II. Other advances have also been made for AI to play Quake 3 in a multiplayer setting with both human and fellow AI teammates. It is exciting to see progress being made in this area, which requires AI agents to work together in a team over long time horizons. This work could have a profound impact, because it can be applied to the important real-life problems mentioned above, where it can save human lives and improve quality of life.